In the ever-evolving landscape of digital experiences, applications serve as the digital face of a business. For customers and employees alike, an elevated experience is about immediacy and instant availability. It is no wonder that organizations are heavily invested in keeping applications available, scalable and secure, especially as their user base grows.

As a result, servers that host these applications must be available, scalable, agile and responsive.

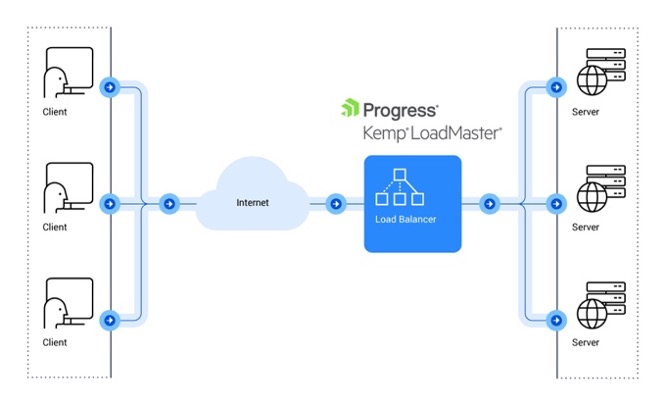

Load balancing is a technology that helps organizations maintain high availability and performance for all their applications.

What is a Load Balancer?

In the context of computing systems, we can broadly define load balancing as “a process of distributing a set of tasks over a set of resources (computing units).”

Extending this to an IT network ecosystem, we can refine the definition and state that load balancing is the technique of efficient traffic distribution across a pool of resources that host or support an application. Any device, hardware or software that performs this activity as its primary function is called a load balancer.

More technically, a load balancer can be described as a ‘reverse proxy.’ The load balancer presents a Virtual IP (VIP) address to the client, and the client treats this address as the server's IP address, unaware that the actual servers sit behind it. Hence the name ‘reverse proxy.’ This technology is known as Server Load Balancing (SLB). SLB is designed for pools of application servers within a single site or LAN.

Why Do We Need a Load Balancer?

Let’s say you have set up a fast-food takeout business. It’s new and relatively small. You have one person operating a single counter to serve your customers, but you usually do a good job with your takeout business. You suddenly have a large influx of customers. Although you are still whipping up some good delicacies, your customers are getting impatient in longer queues. You increase the number of counters, but you notice that efficiency at each counter varies and your customers are not served on time. You might then want to employ a manager who guides your customers to the correct counters based on their needs. The manager here is the ‘load balancer.’

Organizations host applications on several servers so that no single server has to bear the brunt of every client request. However, without an efficient traffic distribution system, traffic can be directed in a way that puts the entire load on a few servers and leaves others idle. As a result, response times get longer and customers are left waiting for applications to become available. In another scenario, one or more servers could experience downtime, during which traffic needs to be distributed to the servers that remain available.

Like the manager in our analogy, who acts as a ‘traffic cop’ for queues, load balancers help optimize server performance by distributing the traffic between the servers to efficiently utilize resources.

Load Balancing Benefits

In scenarios where multiple applications are in play, servers are many, and the overall IT ecosystem is complex, a load balancer can bring benefits like continued availability and scalability.

Increased Availability

One primary load balancing benefit is keeping applications available when and where required. When some servers fail, load balancers redirect traffic to the servers that are still available, so users keep their access.

Increased Scalability

With increased usage, organizations tend to increase the number of servers. A load balancer spreads traffic across the enlarged pool, helping the system handle increased workloads as usage grows.

Increased Efficiency

Load balancers distribute traffic evenly across servers so the resources on the servers are used efficiently. This, in turn, increases the efficacy of the entire application ecosystem.

Increased Security

Load balancers with built-in security features can help organizations protect their systems and data. They enhance network security with features such as encryption (for example, TLS 1.3), authentication mechanisms and access control techniques that check the data and help block malicious content.

Now that we understand the need for load balancers, let’s learn some basic principles about them.

Load Balancing Operating Principles

Load balancers should follow a few basic tenets or what we call load balancing principles for them to work efficiently:

- Load balancers must employ different techniques for different applications.

- Load balancers must support security protocols.

- They should use the right kind and mix of algorithms.

- Load balancers must also constantly monitor the health and status of each server.

Load balancers make the critical decision to route traffic to the correct server. However, no single technique fits all applications; the right one depends on the type and nature of the application. For example, load balancers can make decisions based on the network and transport protocols (IP, TCP, UDP) at Layer 3/4 of the OSI stack. On the other hand, the routing could be based on the content and complexity of the application (such as directing traffic to servers that can handle video streams). The tasks a load balancer performs, the algorithm it uses and the network layer at which it works all depend on the nature of the application.

Load balancers must fully support encryption protocols such as TLS 1.3, which enables them to decrypt data packets, inspect the data and block malicious content.

Load balancers use intelligent algorithms such as Round Robin, Least Connection, Chained Failover and Weighted Response Time to distribute incoming client requests to a pool of application servers. Do note that load balancers do not utilize just one algorithm; they use an optimal mix of algorithms to make their routing decisions.

One critical load balancing principle is that load balancers must constantly monitor the health and status of their servers so that requests are not directed to servers that are either down or too busy with ongoing tasks. This reduces latency and keeps applications available for users when required.
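As a minimal illustration of the health-monitoring principle, the Python sketch below filters a pool down to the servers that accept a TCP connection. The names `tcp_check` and `healthy_servers`, the `(host, port)` pool format and the one-second timeout are all assumptions made for this example; they do not describe how any particular load balancer implements its checks.

```python
import socket
from typing import Callable, Iterable

Server = tuple[str, int]  # hypothetical pool entry: (host, port)


def tcp_check(server: Server, timeout: float = 1.0) -> bool:
    """Transport-level probe: healthy if a TCP connection can be opened."""
    try:
        with socket.create_connection(server, timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


def healthy_servers(
    pool: Iterable[Server],
    check: Callable[[Server], bool] = tcp_check,
) -> list[Server]:
    """Return only the servers that pass the probe, preserving pool order."""
    return [server for server in pool if check(server)]
```

In practice a probe like this would run on a schedule, and the scheduling algorithm would choose only from the servers it returns. Passing a different `check` function allows the same filter to be used with other kinds of probes.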

Load Balancing Algorithms

As stated in the load balancing principles, the algorithms that a load balancer uses depend on the service type and application it caters to. Sometimes, it may even employ a mix of algorithms that serves its purpose best. Load balancers typically use the following algorithms:

- Least Connection

- Round Robin

- Weighted Least Connection

- Weighted Round Robin

- DNS Round Robin

- IP Hash

- Resource-based

- Weighted Response Time

- URL Hash

Weighted Round Robin – The network administrator assigns a static numerical weight to each server in the pool, usually based on the server's processing capacity. For instance, the most powerful server gets a weight of 100, while a server with half as much processing capacity gets 50. The algorithm then assigns roughly twice as many service requests to the first server as to the second. In this way, the pool's most powerful servers receive a higher share of requests than less capable ones.
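To make the weighting concrete, here is a small Python sketch of a weighted rotation. The function name, the server names and the approach of expanding weights into a repeating schedule are assumptions chosen for clarity, not the scheduling logic of any specific product.

```python
from functools import reduce
from itertools import cycle, islice
from math import gcd


def weighted_round_robin(weights: dict[str, int]):
    """Yield server names forever, in proportion to their static weights.

    Assumes a non-empty mapping of positive integer weights.
    """
    # Reduce the weights by their GCD so that 100 and 50 become 2 and 1.
    divisor = reduce(gcd, weights.values())
    schedule = [
        name
        for name, weight in weights.items()
        for _ in range(weight // divisor)
    ]
    return cycle(schedule)


# Hypothetical pool: server-a has twice the capacity of server-b.
rotation = weighted_round_robin({"server-a": 100, "server-b": 50})
first_six = list(islice(rotation, 6))
# first_six == ["server-a", "server-a", "server-b",
#               "server-a", "server-a", "server-b"]
```

This naive version sends consecutive requests to the heavier server before moving on. An implementation that interleaves the picks more evenly would keep the same overall ratio while avoiding short bursts on one server.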

Least Connection - It considers the current server load when distributing requests. Instead of simply rotating requests among servers, it sends the current request to the server with the fewest active connections.
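The idea can be sketched in a few lines of Python. The class name `LeastConnectionBalancer` and its `acquire`/`release` methods are hypothetical, and the sketch assumes the balancer is told when a connection closes; ties go to the server listed first.

```python
class LeastConnectionBalancer:
    """Track active connections per server and pick the least loaded one."""

    def __init__(self, servers: list[str]) -> None:
        # Every server starts with zero active connections.
        self.active = {server: 0 for server in servers}

    def acquire(self) -> str:
        """Choose the server with the fewest active connections."""
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        """Record that a connection to the given server has closed."""
        if self.active[server] > 0:
            self.active[server] -= 1


balancer = LeastConnectionBalancer(["app-1", "app-2", "app-3"])
first_three = [balancer.acquire() for _ in range(3)]  # one connection each
balancer.release("app-2")                             # app-2 finishes early
next_pick = balancer.acquire()                        # app-2 is chosen again
```

Unlike a plain rotation, the choice here depends on how long each connection lasts, so a server stuck with slow requests naturally receives fewer new ones.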

Load Balancing Features

We have covered scheduling and the algorithms that drive it. Let’s look at a few other load balancing features.

- Routing Traffic Based on the Layer: Load balancers can make decisions based on the network and transport protocols (IP, TCP, UDP) at Layer 3/4 of the OSI stack. For example, a load balancer would offer server load balancing (SLB) for TCP/UDP protocols. Similarly, Layer 7 content switching (also known as URL rewriting) uses information from the application layer of the network stack to augment the lower-level network switching operations. Load balancers can use information such as the service a packet is intended for and the data it carries to route packets in real time to the server best suited to process them.

- High Availability: An essential load balancing feature that sustains high traffic and provides failover when the primary infrastructure is not functional. It keeps the server farm reachable even when the primary load balancer fails by re-routing traffic to one or more backup load balancers, giving users a seamless experience with little or no noticeable downtime.

- Performing Server Functions on the Load Balancer: Latency can be brought down by offloading functions usually performed by the server to the load balancer. One example is TLS/SSL offloading, in which the load balancer takes on the burden of decrypting and encrypting traffic transmitted via TLS/SSL. This is also referred to as SSL termination. Similarly, transparent caching for HTTP/HTTPS at the load balancer avoids an extra hop to the server. Certain load balancers can also compress static and dynamic HTTP/HTTPS content, making the packets lighter and traffic smoother.

- Health Checks: Constantly monitoring the health and status of each server in the pool prevents requests from being sent to servers that are too busy or offline. The health check employed might differ from layer to layer. For example, LoadMaster uses Layer 4 health checks for TCP services and Layer 3 health checks (ICMP ping) for both TCP and UDP Virtual Services.

- Session Persistence: Maps each logical client to a single server so that all requests from that client are directed to the same server. This is very useful for applications that need to store session-specific data and maintain session state. It can help boost performance and prevent data inconsistency in case of any disruption.
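One simple way to approximate session persistence is the IP Hash approach named in the algorithm list: hash the client's address and use the result to pick a server. The Python sketch below is illustrative only. The function name `pick_server` and the server names are assumptions, and the sketch identifies a client solely by its IP address.

```python
import hashlib


def pick_server(client_ip: str, servers: list[str]) -> str:
    """Map a client IP to a server; the same IP always yields the same server.

    Assumes `servers` is non-empty and keeps a stable order between calls.
    """
    # A cryptographic hash is used only for its stable, even spread of values.
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["app-1", "app-2", "app-3"]
chosen = pick_server("203.0.113.7", servers)
# Every later request from 203.0.113.7 maps to the same server.
```

A limitation of this plain modulo scheme is that adding or removing a server changes the mapping for many clients, so their sessions may move. Cookie-based persistence or a stored client-to-server table avoids that at the cost of extra state.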

Application Delivery Controller

Load balancers with features such as the above and more do not simply route traffic. Instead, they act as Application Delivery Controllers (ADC).

Application Delivery Controllers are the next generation of load balancers and are typically located between the firewall/router and the web server farm. In addition to providing Layer 4 load balancing, ADCs can manage Layer 7 for content switching and provide SSL offload and acceleration. They tend to offer more advanced features such as content redirection as well as server health monitoring. They are also known as web switches, URL switches, web content switches, content switches and Layer 7 switches.
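Layer 7 content switching can be pictured as a lookup from request content to a server pool. The following Python sketch routes on the URL path only. The rule table, the pool names and the `choose_pool` function are hypothetical and serve purely to illustrate the idea, for instance sending video requests to servers suited to streaming.

```python
from urllib.parse import urlsplit

# Hypothetical rules: URL path prefix -> name of the pool that handles it.
RULES = [
    ("/video/", "streaming-pool"),
    ("/api/", "api-pool"),
]
DEFAULT_POOL = "web-pool"


def choose_pool(url: str) -> str:
    """Pick a server pool from the request URL's path (first match wins)."""
    path = urlsplit(url).path
    for prefix, pool in RULES:
        if path.startswith(prefix):
            return pool
    return DEFAULT_POOL
```

Once a pool is chosen, a scheduling algorithm such as Round Robin or Least Connection would select the individual server within it. Real content switching rules can also inspect other application-layer data such as host names, headers or cookies.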

Security Considerations in Load Balancing

Most internet traffic today is encrypted. It is only natural that load balancers, the intermediary between clients and servers, must be able to encrypt and decrypt traffic and support TLS 1.3. However, inbuilt features that help protect data from common vulnerabilities are an added advantage. For instance, LoadMaster has a feature called Web Application Firewall (WAF) that supports the creation of per-application security profiles to enforce source location-level filtering, adopt pre-integrated rulesets for common attack vectors and provide custom security rules support. Other features such as Security Certificate Management, authentication methodologies and SSO are essential for load balancers to perform their functions more efficiently.

Best Practices for Load Balancing

While practitioners must adhere to the basic load balancing principles, they must also follow certain best practices while implementing load balancers.

- Practitioners need to assess their needs by analyzing traffic patterns, expected loads and critical applications to determine the best solution.

- Practitioners should select suitable algorithms based on their application needs and server characteristics.

- One should also continuously monitor performance metrics and implement proactive measures to address potential issues before they cause downtime or performance bottlenecks.

- Practitioners must implement failover mechanisms and security best practices to maximize availability and security protection.

How Organizations Around the World Have Implemented Load Balancing

Music to the Ears!

The Elbphilharmonie, a state-of-the-art concert hall in Hamburg, Germany, seats around 2,600 guests. After its grand opening in January 2017, it sold around 1,300,000 tickets in its opening season alone. However, providing guests with a smooth online ticketing experience during high demand was challenging. Medialine AG, which hosted its servers, had a target of not losing a single event-related inquiry from a potential customer. To provide a non-disruptive ticketing experience for online customers, Medialine ordered two Virtual LoadMaster 5000s (VLMs), each capable of delivering 5000 Mbps of throughput and 10,000 SSL TPS. The VLMs were deployed within Medialine's infrastructure, consisting of three physical servers, all virtualized with Hyper-V. Running on the physical servers and a redundant storage environment were 80 virtual machines. Since deployment, the pair of VLMs has distributed peak-usage inquiries among the available scaled virtual servers of the website, ticketing system and integrated checkouts, thus maintaining high availability and providing superior customer experiences.

It's All Central

Wowrack, a managed cloud service provider with data centers across various countries, wanted to enhance the performance and security of the applications hosted in its infrastructure and cloud services to offer better customer service. A key challenge was to centrally manage the application delivery infrastructure to plan, deploy, monitor and report on its applications' performance, utilization and security. Wowrack deployed Kemp LoadMaster as virtual machines. The Kemp RESTful API allowed the Wowrack team to automate the deployment process and integrate it into workflows. The team also deployed Kemp 360 Central to streamline management and to report on its application delivery infrastructure. Wowrack now has a load-balancing solution that allows specific configurations for each application it hosts for clients. The result is a great application experience, which is driving high customer satisfaction. Plus, cost savings are significant due to the flexible Kemp metered licensing model, a benefit that Wowrack passes on to its customers.

Conclusion

As they evolve, load balancers are poised to incorporate advanced algorithms for dynamic traffic distribution and predictive scaling based on user behavior. They are also well-placed to adopt enhanced security measures against evolving cyber threats, maintaining optimal performance and reliability in increasingly complex digital environments.

In conclusion, load balancers equalize traffic in the client-server world. Without proper optimization and routing techniques, servers face longer downtime and user experience degrades. By optimizing the traffic flow, load balancers allow users to access services consistently without disruption, keeping applications accessible, available and scalable.

To get hands-on experience with load balancers, download the free LoadMaster today!

For advanced features like WAF, learn about LoadMaster here.